MPI

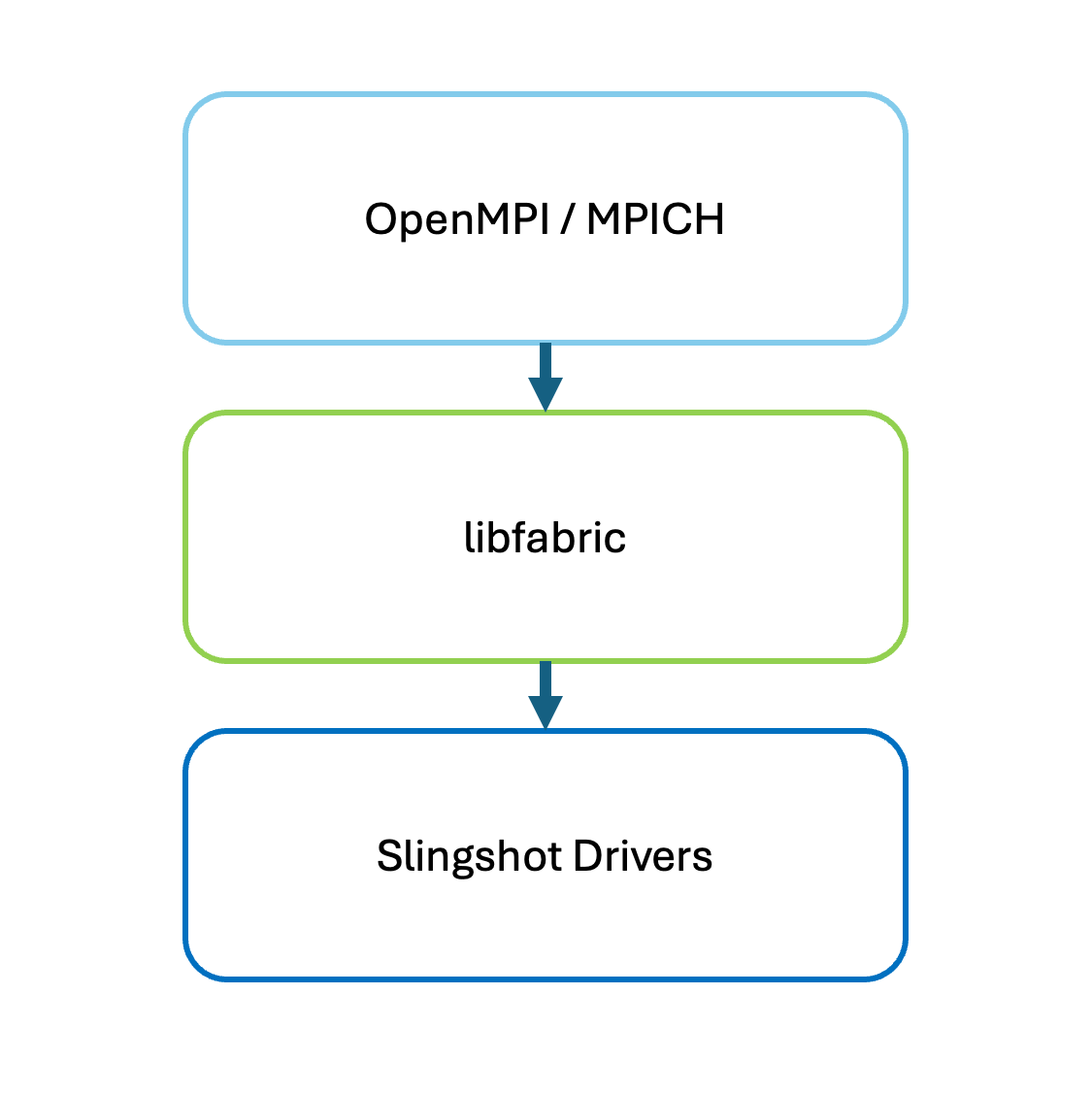

Message Passing Interface (MPI) is a portable message-passing standard designed for parallel computing. To work optimally across a compute cluster, MPI must be able to communicate with the Network Interface Cards (NICs) and switches. Isambard-AI is an HPE Cray EX system and Isambard 3 is an HPE Cray XD system; both are based on the Slingshot 11 (SS11) interconnect.

To run on Isambard-AI or Isambard 3, MPI first needs to communicate with the standardised fabric API (OpenFabrics Interfaces, OFI) provided by the libfabric module and libraries. libfabric translates MPI communication calls into operations on the Slingshot drivers.

Don't use mpirun or mpiexec

To execute your mpi_app, launch it directly with srun rather than with mpirun or mpiexec. This lets you provide the correct PMI type with the --mpi flag.
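As a minimal sketch, a batch script that launches an MPI program with srun might look like the example below. The job name, node and task counts, time limit and the executable path ./mpi_app are placeholder assumptions, and your project may need further #SBATCH options (for example an account or partition).

```shell
#!/bin/bash
# Placeholder resources (assumptions): adjust these for your own job.
#SBATCH --job-name=mpi_app
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=4
#SBATCH --time=00:10:00

# Load the Cray programming environment, which provides Cray MPICH.
module load PrgEnv-cray

# Launch the ranks with srun rather than mpirun/mpiexec.
# --mpi selects the PMI type; cray_shasta is already the default for Cray MPICH.
srun --mpi=cray_shasta ./mpi_app
```

Save it as, for example, mpi_job.sh and submit it with sbatch mpi_job.sh. With these settings srun starts 8 ranks in total (2 nodes with 4 tasks each).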

Default MPI library (Cray MPICH)

Isambard supercomputers are built by HPE Cray and are based on the Slingshot interconnect. The Cray MPICH library is available for building MPI applications.

This MPI is provided by the cray-mpich module and is loaded as part of the Cray Programming Environment (CPE / PrgEnv-cray).

For instance, you can compare the loaded modules before and after loading PrgEnv-cray:

$ module list

Currently Loaded Modules:
  1) brics/userenv/2.3   2) brics/default/1.0

$ module load PrgEnv-cray

$ module list

Currently Loaded Modules:
  1) brics/userenv/2.3   5) craype-arm-grace       9) cray-mpich/8.1.28
  2) brics/default/1.0   6) libfabric/1.15.2.0    10) PrgEnv-cray/8.5.0
  3) cce/17.0.0          7) craype-network-ofi
  4) craype/2.7.30       8) cray-libsci/23.12.5

After loading a programming environment, you can see the compile and link flags used by the MPI compiler wrapper with mpicc -show:

$ mpicc -show

craycc -I/opt/cray/pe/mpich/8.1.28/ofi/cray/17.0/include -L/opt/cray/pe/mpich/8.1.28/ofi/cray/17.0/lib -lmpi_cray

Here you can see that the Cray MPI library (-lmpi_cray) will be linked.
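If you switch between programming environments, a small shell function can pick out just the MPI library from the wrapper output. This is a hypothetical convenience helper (the name mpi_lib_from_show is ours, not part of the Cray tools), assuming bash or another POSIX shell and that the library appears as a whitespace-separated token starting with -lmpi.

```shell
# Hypothetical helper: print the MPI library flag(s) found in `mpicc -show` output.
# Assumes the library is a whitespace-separated token beginning with -lmpi.
# The body runs in a subshell ( ... ) so that `set -f` does not leak out.
mpi_lib_from_show() (
  set -f                      # disable globbing while word-splitting the flags
  for token in $1; do
    case $token in
      -lmpi*) printf '%s\n' "$token" ;;
    esac
  done
)
```

For example, mpi_lib_from_show "$(mpicc -show)" should print -lmpi_cray under PrgEnv-cray and -lmpi_gnu_123 under PrgEnv-gnu, matching the outputs shown on this page.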

MPICH

Support for aarch64 was only recently added to Cray MPICH, and we recommend setting some environment variables to avoid known issues in the current release.

Please see the Known Issues page for current advice on setting variables.

Cray MPICH is also available through the GNU programming environment, PrgEnv-gnu:

$ module load PrgEnv-gnu

$ mpicc -show

gcc -I/opt/cray/pe/mpich/8.1.28/ofi/gnu/12.3/include -L/opt/cray/pe/mpich/8.1.28/ofi/gnu/12.3/lib -lmpi_gnu_123

PMI (Process Management Interface)

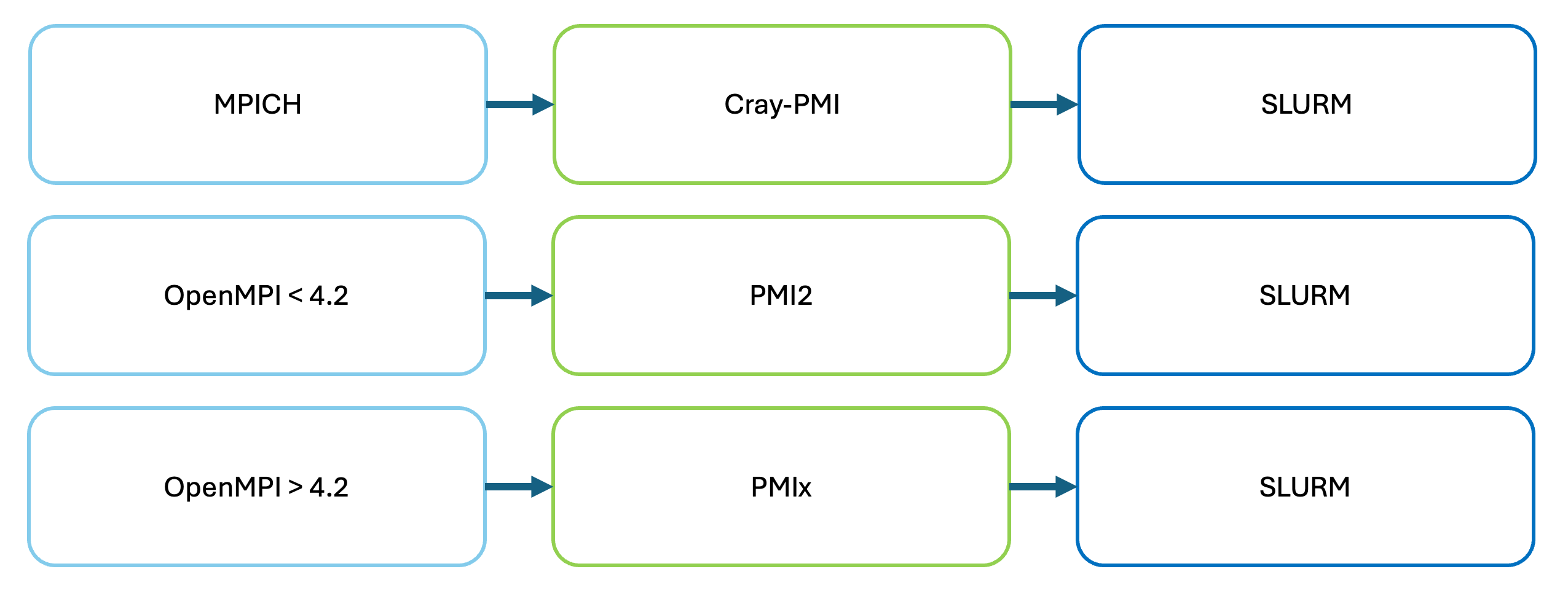

MPI processes need to communicate with the process manager (Slurm) to find each other and agree on rank assignments across nodes. Many MPI implementations separate these process management functions from the MPI library itself, so a Process Management Interface (PMI) is required to connect the two.
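Slurm tells every task it launches who it is through environment variables such as SLURM_PROCID (the task's global index), SLURM_NTASKS (the total number of tasks) and SLURMD_NODENAME (the node running the task). The PMI plugin exchanges this kind of launch information with the MPI library, and with srun the MPI rank usually matches SLURM_PROCID. The function below is a hypothetical sketch that only prints these variables; it does not use PMI itself.

```shell
# Hypothetical helper: describe this task using variables that srun sets.
# Outside a Slurm job step it falls back to "?" and the local hostname.
describe_task() {
  printf 'task %s of %s on %s\n' \
    "${SLURM_PROCID:-?}" "${SLURM_NTASKS:-?}" "${SLURMD_NODENAME:-$(hostname)}"
}
```

Inside an allocation you could run it on every task with srun --ntasks=4 bash -c "$(declare -f describe_task); describe_task", which prints one line per task; the order of the lines is not guaranteed.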

PMI for Cray MPICH

If you are using the default MPI library (Cray MPICH), you should not need to specify the PMI type. If you are using a different MPI library that you have installed yourself, such as OpenMPI, you may need to use a PMI type other than the default.

PMI Types

To list the different PMIs available with Slurm you can run the following command:

$ srun --mpi=list

MPI plugin types are...

cray_shasta

none

pmi2

pmix

specific pmix plugin versions available: pmix_v4

If you are using Cray MPICH, you can use cray_shasta, which is the default option:

$ srun --mpi=cray_shasta mpi_app

If you are using an older version of OpenMPI (earlier than v5.0), you should use the pmi2 option:

$ srun --mpi=pmi2 mpi_app

If you are using a modern version of OpenMPI (v5.0 or later), you should use the pmix option:

$ srun --mpi=pmix mpi_app
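The choice above can be written down as a small shell function, for example in a job script that is reused with different MPI builds. This is a hypothetical helper: pick_pmi is not a Slurm or MPI command, the flavour labels cray and openmpi are our own, and it assumes bash and that you already know the OpenMPI major version (for example from ompi_info --version).

```shell
# Hypothetical helper encoding the PMI guidance above. It prints the value
# to pass to srun --mpi= for an MPI flavour and, for OpenMPI, a major version:
#   pick_pmi cray       -> cray_shasta
#   pick_pmi openmpi 4  -> pmi2
#   pick_pmi openmpi 5  -> pmix
pick_pmi() {
  local flavour=${1:-} major=${2:-}
  case $flavour in
    cray|cray-mpich)
      echo cray_shasta ;;
    openmpi)
      # Require a purely numeric major version before comparing it.
      case $major in
        ''|*[!0-9]*)
          echo "pick_pmi: need an OpenMPI major version, e.g. 4 or 5" >&2
          return 1 ;;
      esac
      if [ "$major" -ge 5 ]; then
        echo pmix
      else
        echo pmi2
      fi ;;
    *)
      echo "pick_pmi: unknown MPI flavour '$flavour'" >&2
      return 1 ;;
  esac
}
```

A job script could then call srun --mpi="$(pick_pmi openmpi 5)" mpi_app. Keeping the mapping in one place means only the function needs changing if the recommended PMI type for a library changes.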

Installing Different MPI Versions

You are welcome to build your own MPI versions from source on Isambard-AI or Isambard 3. Alternatively, conda provides an easy way to install other MPI versions. Please see our Conda instructions to get started with Conda Miniforge.

Install MPI with conda

$ conda install -c conda-forge mpich

$ conda install -c conda-forge openmpi

Installing OpenMPI from source

Please also consult the following documentation for installing OpenMPI from source: